Including 41 detailed tips, advice from top conversion experts, and plenty of case studies. This article will skyrocket your A/B testing skills.

This is not a post about what you should test. This is about how to execute the perfect test.

A/B testing is the most widely used method for improving conversion rates. Tools like VWO, Adobe Test & Target, and Optimizely make the practice easier than ever, but is it really as simple as it looks?

The answer is no. Sure, you can start using an A/B testing tool anytime and follow "best practices" found online, but the chances of success will be quite low. A/B testing is a complete process that requires meticulous analysis and understanding of the website you're testing. It's science from A to Z.

Instead of compiling a list of what to test, which is often useless because every website is different, as will be every test, I decided to create this list of detailed best practices for performing A/B tests.

From planning to execution, everything is covered. I hope you will learn new things, and please leave me your feedback in the comments below. If there's one I missed, let me know and I'll be sure to update the list.

Now, let's get started:

1. Plan.

I recently wrote an article on the importance of planning your usage of Google Analytics, and how to create a planning framework for proper measurement. The same framework can, and should, be applied to your A/B testing experiments. In other words, if you don't plan, you're testing blindly and will have trouble measuring outcomes. I recommend the following planning structure:

1. What is your company's unique value proposition, its main objective?

2. What are its secondary support objectives? These are the objectives that you have to work on in order to make your promise, your UVP, possible.

3. How is your website tied to these objectives? What are the website's goals that will contribute to the two above? Is it to sell a product? Raise awareness? Get contact information of potential leads?

4. Find your key performance indicators. How are you going to measure your website's goals? What are the important metrics? Your sales conversion rate? Number of signups? Average order value? Your KPIs will be the goals and metrics that you should focus on improving through A/B testing.

5. Set your target metrics. How do you determine if your KPIs are successful or not, or how do you know if 100 signups is good for your company? That's why you set targets. Targets are thresholds that you must reach in order to determine if a test is successful. Targets are the goals of your KPIs.

6. Determine the segments. It is likely that you will do pre-test and post-test segmentations if you're really serious about A/B testing, so instead of making them up on the fly, you have to plan and ensure they relate perfectly to your targets, KPIs and key business objectives.

Of course, that's the initial planning framework for proper measurement, but don't forget that you must also analyze your data to find exactly what to test and generate hypotheses.

I recommend taking a look at ConversionXL's incredible, in-depth post on planning for A/B testing for further reading.

2. Use the scientific method.

A/B testing is a science experiment, and it requires a refined process. The scientific method includes planning, which I explained in the previous tip, but planning is just one part of the process. Using the scientific method, you must first ask yourself a question that addresses a problem, such as "Why is my conversion rate lower than the industry's average?"

Next, you must conduct research to understand your problem using your analytics tools. Usability testing, using heatmaps, and identifying your visitors' navigational patterns and potential roadblocks are all part of the process. Once you have identified the potential sources of the problem, you must formulate a hypothesis. In this case it could be: "Visitors are not converting as well as on my competitors' websites because the navigation is too complicated; thus, they have a hard time finding what they're looking for".

Using that hypothesis, you create a test, analyze the outcome, and report the results. Repeat and refine.

3. Test based on data (also a vital step of the scientific method).

Look in your analytics and closely inspect your visitors’ conversion paths and your goal funnels. Is there a place where the exit rate is abnormally high? Are visitors at the end of a checkout process returning to a previous stage, resulting in cart abandonment? Are visitors finding the information they need in order to make their purchase? Find the biggest potential roadblocks in your results and test them for possible improvement.

Identifying areas in your funnel that get the most drop-offs (red arrows in the image below) is a good way to start looking for areas that could be improved.

“Doing proper user research and interviews is super important for coming up with winnable hypotheses. Very often people start A/B testing their ideas, skipping the user research component altogether. There is a reason VWO has integrated heatmaps and surveys with A/B testing. We want our customers to spend the majority of their time understanding their users and problems they face on the website, and only then start A/B testing.” - Paras Chopra, Founder of VWO

4. Use user testing tools to gain behavioral insights.

Getting feedback from your visitors and religiously digging into your metrics is not the only thing you should do if you want to have deep insights and the power to plan and execute your tests better. It's also important to know how your visitors interact with your website. Yes, you can ask them, but what they say and what really happens are two entirely different things. It's hard for visitors to recall how they went through your website and what they were thinking all along, so instead of asking, use user testing tools: they are one of the best ways to know how people behave on your website.

Being able to see your customers' behaviors with usability tests will help you understand the problems, hiccups, and any barriers preventing conversion. With these insights, it's much easier to know what to potentially improve and test using split experiments.

Even Evernote has been able to increase user retention by 15% with user testing. Some recommended user testing tools: Clicktale, UserTesting, UserZoom, FiveSecondTest.

5. Always track macro conversions.

The goal of your A/B tests won't always be to improve your website's ultimate objective (also called a macro conversion); sometimes you might want to improve your secondary goals (micro conversions), such as your newsletter signups. There's no problem in doing this if you think it's a priority for your website, but don't forget to keep tracking your macro conversion when you're optimizing for the micro. Now - why should you do this? Let's say you're optimizing an e-commerce store and you have a newsletter signup form on the product pages. You create an A/B test to test your hypothesis, which is that your signup form is not visible enough; therefore, it should be brought closer to the product's description.

When the test is over, you notice that your newsletter signups have increased - success? Yes if your macro conversion rate stayed the same or increased, but you can't know that if you have not kept tracking the macro. Here's what could have happened: positioning the newsletter form high on the product page increased your newsletter signups, but because it sat closer to critical elements such as the "add to cart" button, fewer products were added to the cart due to distraction, resulting in fewer sales. See what happened?

Always keep track of all your important micro and macro conversions when running experiments.

6. Don’t end your tests too fast.

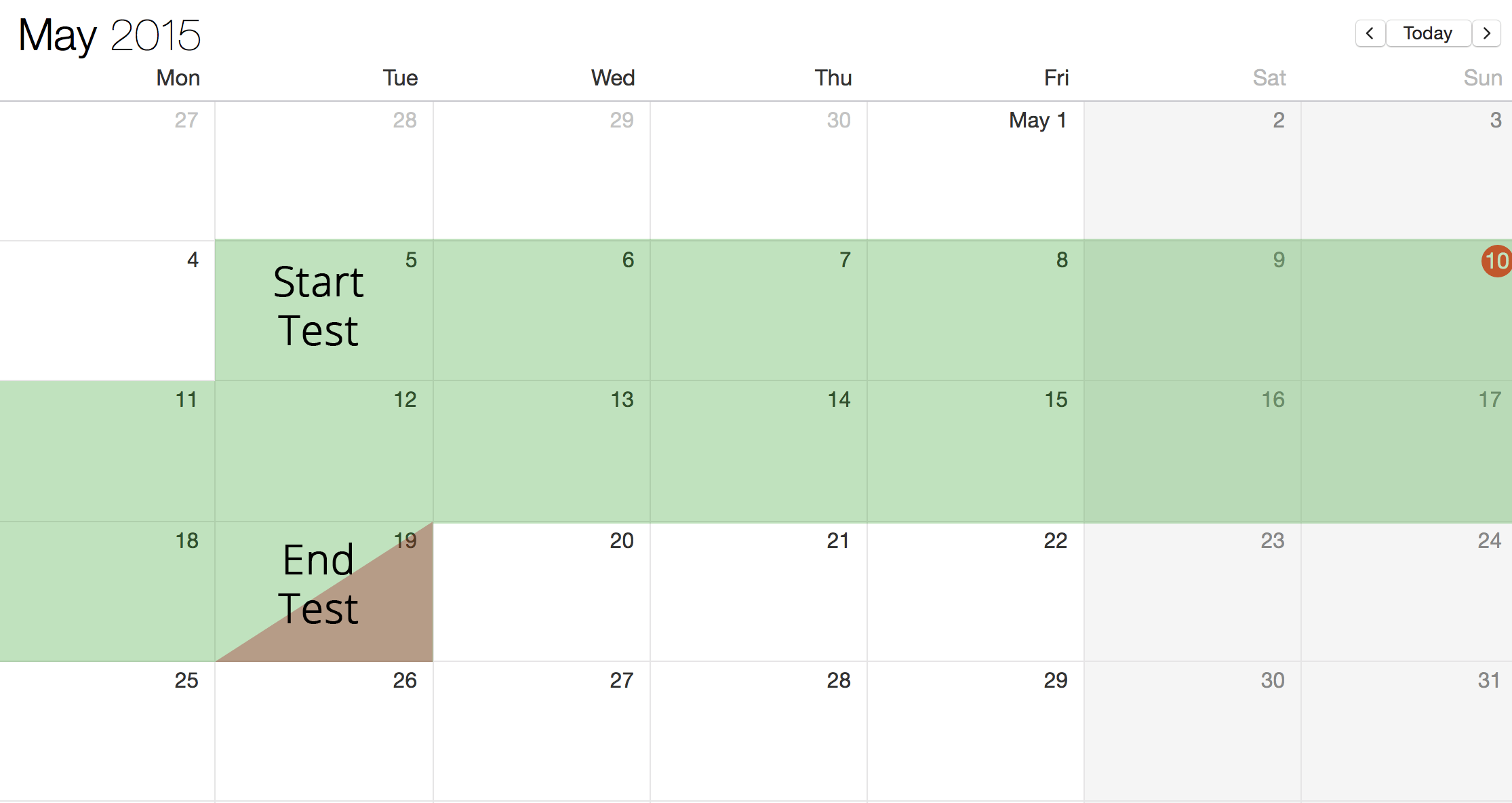

Ending tests too early is one of the most common mistakes. Popular A/B testing tools will show you results before the test is over, and it is tempting to think you are saving time and money by stopping a test as soon as it reaches statistical significance. In reality, you're scrapping the whole test, and false positives are what you'll get. False positives won't help you improve your website in any way; in fact, they might even decrease your conversions. Each of your tests should last two to three business cycles. Usually, the fewer visitors you have, the longer it will take to determine the winning variation.

Use VWO’s A/B Split and Multivariate Test Duration Calculator to help you determine how long your test should last.

7. Try to target a minimum of 100 conversions for each variation before you end it.

Peep Laja of ConversionXL has often said he aims for a minimum of 100 conversions before considering ending a test, and the more the better. Although he says there is no magic number as to when to end tests, 100 seems like a good minimum. This helps you be confident in the outcome of a test and reduces the chances of false positives. Ending a test too early could result in a “winning” variation that actually decreases your conversion rate. In addition, the more conversions you get, the more data you will have access to when drilling down in your analytics.

Not having enough data will leave your segments too small for in-depth, useful analysis.

It is well known that A/B testing works best on high-traffic sites; for smaller sites with less traffic and fewer conversions, reaching these numbers will be a challenge. Be sure to use Optimizely's sample size calculator before starting any tests.
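To get a feel for what the 100-conversion minimum means on a small site, here is a rough back-of-the-envelope sketch. The function name and the example traffic numbers are my own assumptions for illustration, and it assumes traffic is split evenly between variations and that the conversion rate stays roughly constant.

```python
import math


def days_to_min_conversions(daily_visitors, conversion_rate,
                            variations=2, min_conversions=100):
    """Estimate how many days it takes for EACH variation to collect
    `min_conversions`, assuming an even traffic split (an assumption)."""
    visitors_per_variation_per_day = daily_visitors / variations
    conversions_per_day = visitors_per_variation_per_day * conversion_rate
    return math.ceil(min_conversions / conversions_per_day)


# Hypothetical small site: 300 visitors a day, 2% conversion rate.
print(days_to_min_conversions(daily_visitors=300, conversion_rate=0.02))
```

With these made-up numbers each variation only collects about three conversions a day, so the test needs more than a month before it even reaches the minimum. Adding a third variation makes the wait longer still.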

8. Reach a minimum of 95% statistical significance.

Running an A/B test without paying close attention to statistical confidence is worse than not running a test at all. You should always reach a minimum of 95% statistical significance before concluding an experiment, even if your criteria and planned length have been met; otherwise, you're failing your tests. Here's an extract on avoiding false positives through statistical significance from Dynamic Yield's excellent article on the topic:

"To avoid “false positive” mistakes, we need to set the confidence level, also known as “statistical significance.” This number should be a small positive number often set to 0.05, which means that given a valid model, there is only a 5% chance of making a type I mistake. In plain words, there is a 5% chance of detecting a difference in performance between the two variations, while actually no such difference exists (a 5% chance of mistake). This common constant is commonly referred to as having “>95% Confidence.”"

Bottom line: the higher your level of statistical significance, the less likely you are to conclude that your variation is a winner when it is not.
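If you're curious what a significance calculator does under the hood, the sketch below uses a pooled two-proportion z-test, which is one common textbook approach. I'm not claiming this is the exact method VWO, Optimizely, or any other tool uses; the function name and the example numbers are assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def ab_significance(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided, pooled two-proportion z-test.

    Returns (z, p_value). A p_value below 0.05 corresponds to the
    ">95% confidence" threshold discussed above.
    """
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        # No conversions at all (or everyone converted): nothing to compare.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical numbers: 100/2000 conversions on A, 130/2000 on B.
z, p = ab_significance(100, 2000, 130, 2000)
print(f"z = {z:.2f}, p = {p:.3f}, significant at 95%: {p < 0.05}")
```

The p-value is the chance of seeing a gap at least this large if the two variations actually performed the same, so smaller is better.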

9. Don’t solely rely on statistical significance bars of testing tools.

Statistical significance is important, and you should never end a test before it reaches at least 95%. But ending a test based solely on that number, without understanding how it works, is dangerous.

Make sure all your criteria for conversions and the planned length of the test are met before ending a test. For instance, if you run a test for a week and your sample size is 200, which means that 100 visitors will be shown variation A and the other 100 variation B, your A/B testing tool could show you a statistical significance of 95% or more, but that doesn’t mean you should end your test; in fact, it’s probably lying to you. The reason? Your sample size is more than likely too small to expect any truth in the results, and the test will not have run for enough business cycles to prove the change is consistent.

Use a sample size calculator and a statistical significance calculator to get an idea of whether your test is valid. Of course, don't forget to use good ol' logic too.
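One way to keep yourself honest is to write your stopping criteria down as an explicit checklist, so the significance bar is only one of several conditions. This is a minimal sketch: the function name is hypothetical, and the 14-day default is my assumption standing in for "two weekly business cycles", so adjust it to your own cycle.

```python
def ready_to_stop(conversions_per_variation, days_run, confidence,
                  min_conversions=100, min_days=14, min_confidence=0.95):
    """Return (ok, checks): ok is True only if EVERY criterion is met."""
    checks = {
        "enough_conversions": all(
            c >= min_conversions for c in conversions_per_variation
        ),
        "enough_days": days_run >= min_days,
        "significant": confidence >= min_confidence,
    }
    return all(checks.values()), checks


# The one-week, 200-visitor test described above: the tool reports 96%,
# but the other two criteria are nowhere near met.
ok, checks = ready_to_stop([12, 21], days_run=7, confidence=0.96)
print(ok, checks)
```

Returning the individual checks alongside the verdict makes it obvious which criterion is still holding the test open.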

10. Pay attention to the blend of traffic going through your A/B tests.

Remember that some visitors are new to your site, while others are returning. Some visitors will come from Google, some from your Facebook page, others from your email marketing campaigns. All those subsets of visitors behave and convert differently on your site due to the context and intent behind their visit. Look at the big picture. With a big enough sample size, it’s easy to create segments in Google Analytics and see all the details.

11. Have a solid hypothesis before testing.

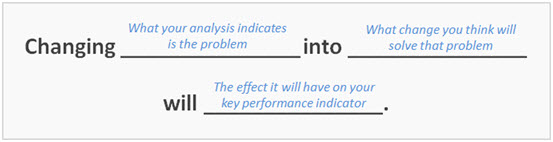

After finding the problem you want to solve through split testing, you need to form a solid hypothesis that explains the problem, the potential solution, and the anticipated result. A good hypothesis is measurable and therefore testable through A/B or multivariate testing.

A trick to formulate a good hypothesis is to follow MarketingExperiments' formula:

12. Avoid testing the little details first.

Changing a font or the color of a button might have increased the conversion rate of many sites according to your favorite A/B testing case studies, but if you’re just starting out, there are more important elements that you should focus on. Take a look at the table below; it reflects a site with a monthly total of 10,000 visitors and a conversion rate of 5%.

Source: TechCrunch, Prevent Analysis Paralysis By Avoiding Pointless A/B Tests.

Small changes can definitely increase conversion rates, but as shown above, if you have a site with a modest amount of traffic, small increments to your conversion rate will require a very large sample size and take an absurd amount of time to reach a conclusive test at 95% significance. Don't forget that running multiple tests at the same time is rarely recommended, so the longer your tests take to become conclusive, the fewer opportunities you have to improve.

A study by MarketingSherpa shows that the headline, images, copy and form layout were the elements that most consistently had a significant impact on improving conversion rates.
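To see why small lifts are so expensive to detect, you can estimate the numbers yourself. The sketch below is not a reproduction of the TechCrunch table; it uses a standard two-proportion sample size approximation with the same starting point (10,000 monthly visitors, 5% conversion rate). The 80% statistical power, the even 50/50 split, and the function names are my assumptions.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variation to detect a relative
    lift with a two-sided test at the given alpha and power."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def lift_table(monthly_visitors=10_000, baseline_rate=0.05,
               lifts=(0.05, 0.10, 0.20, 0.50)):
    """Rows of (relative lift, visitors per variation, months of traffic)."""
    rows = []
    for lift in lifts:
        n = visitors_per_variation(baseline_rate, lift)
        months = 2 * n / monthly_visitors  # two variations share the traffic
        rows.append((lift, n, round(months, 1)))
    return rows


for lift, n, months in lift_table():
    print(f"{lift:.0%} lift: {n:,} visitors per variation, about {months} months")
```

The required sample grows roughly with the inverse square of the lift you want to detect, which is why halving the expected lift roughly quadruples the time the test takes.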

This is why your headline, subheadline, page layout, navigation, calls to action, images and videos are some of the first elements to test. Focus on the elements that really matter, those with big impact that have the potential to drive more sales. This will give you a good start, which is much more likely to yield bigger and better results than testing, let’s say, Arial vs. Times New Roman.

13. Use multivariate tests if you have a lot of changes with different combinations to test.

A multivariate test enables you to measure the impact of a combination of multiple elements on your conversion rate. It may sound complicated, but at its base, all you're doing is breaking down several variables into a number of A/B tests. The benefit is that once the test is over, you will know which combination of elements leads to a better-converting page. For example, Headline 1 combined with Button 2 and Image 2 might perform better than Headline 2 combined with Button 2 and Image 1. When performing multivariate tests, your traffic will be split into quarters, sixths, eighths, or even smaller segments in order to distribute visitors equally amongst all variations. That means a high number of monthly visitors is needed for such tests to be successful. Here's an illustrated explanation in case you're still confused:

Source: dynamicyield.com
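If it helps to see the combinations spelled out, the sketch below enumerates every variation of a full multivariate test and shows how thin the traffic gets. The element names are made up for illustration, and it assumes a full-factorial test where every combination gets an equal share of visitors.

```python
from itertools import product


def multivariate_combinations(elements):
    """Return every combination of the given element options as a dict."""
    names = list(elements)
    option_lists = [elements[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*option_lists)]


# Hypothetical test: 2 headlines x 2 buttons x 2 images.
elements = {
    "headline": ["Headline 1", "Headline 2"],
    "button": ["Button 1", "Button 2"],
    "image": ["Image 1", "Image 2"],
}
combos = multivariate_combinations(elements)
share = 1 / len(combos)

print(len(combos), "variations, each receiving", f"{share:.1%}", "of traffic")
for combo in combos:
    print(combo)
```

Three elements with two options each already produce eight variations, so each one sees only an eighth of your visitors. Add a third option to any element and the traffic per variation drops again.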

14. Ask visitors for feedback.

Your analytics tools are good at showing your visitors' path to conversion, most visited pages, and all the other metrics you care about, but you can only go so far with the information they provide.

How do you know if your visitors found what they are looking for? How do you know the specific reason why they're on your website? And how can you find out why they're not purchasing your products or signing up to your service?

Many people think this information is extremely difficult to get, but the truth is that they're overthinking it: just ask the visitors. The best way to gain insights and feedback from people visiting your site is by using a tool like Qualaroo (pictured below) or by using your email marketing software’s (Aweber, Mailchimp, ...) autoresponders to ask visitors what they think about your site. This will help you figure out what to test for maximum impact.

Gaining insights using this simple method of asking your visitors will enable you to pinpoint more specifically what you should test. While collecting feedback, if you notice certain patterns or trends in the responses, you will be able to take that information, make changes accordingly, and test them to see if your change has answered the questions or objections brought up in the feedback.

With the additional data gathered by surveying visitors, Kingspoint has been able to fix a problem on their website that could give them up to $60,000 in additional revenue.

15. Use custom variables.

A/B testing tools make running split tests easy, but when you need to analyze deeper and use advanced segments to get the information you need, Google Analytics will be your best friend. So what do you do? You must integrate your tests within Google Analytics to track everything there too. Most popular A/B testing tools such as VWO and Optimizely can be integrated easily, so don't sweat. But what if you're using other tools that don't integrate directly? Set up custom variables manually.

16. Use Kissmetrics’ Get Data Driven A/B Significance Test tool.

I mentioned that 95% statistical significance should be the minimum you need before completing a test. To double-check your data, this handy little tool will help you know if your changes really helped increase conversions.

17. Utilize insights from site search.

The objective of internal search is to quickly enable your visitors to find what they're looking for on your website. Big retailers like Amazon prominently feature their search bar because visitor-generated queries provide such deep insights about what users want to find on the website.

When you enable site search within Google Analytics, you will be able to gather data on the exact search terms typed in by your visitors. This will allow you to identify search patterns and possibly missing information so you can optimize for your customers’ needs. Knowing what your visitors are looking for on your site becomes extremely handy when planning your A/B tests.

18. The conversion trinity is important; don’t ignore it.

Optimization expert Bryan Eisenberg calls his magic formula the "conversion trinity". He mentions on his website that every successful test, ad, or landing page improvement has come from improving one or more of the trinity factors. He describes the conversion trinity as 3 things: relevance, value, and call to action:

- Relevance: Is your homepage or landing page relevant to the visitor’s wants or needs? Are you staying consistent by maintaining the scent?

- Value: Is the value proposition answering all the questions a visitor will be asking? Will your solution provide them with the right benefits?

- Call to action: Is it clear what the visitor needs to do next? What is the most important action you want them to take, and can they perform it with confidence, or are they confused?

19. Use A/B testing tools like VWO or Optimizely.

Coding a whole new page with changes, publishing it, and then setting up the test in your analytics can take lots of unnecessary time when it's so simple to use ready-made testing tools. Coding your own test can also result in errors in the code that could potentially skew the results.

Use established tools dedicated to A/B testing for a quick, simple and more reliable way of creating, organizing and performing tests. They're also the easiest way to integrate your test within Google Analytics, and their dashboards come in pretty handy when you need an overview of your tests.

20. Focus on the 20% that will bring you 80% of the results.

We've talked about the importance of prioritizing changes that have the highest potential for impact, but even after prioritizing, there's a nearly endless number of elements that could be tested. A trick to narrow your choices down even further is to use what is called the Pareto Principle. The Pareto Principle, often referred to as the 80/20 rule, states that roughly 20% of the effort generates 80% of the results, just as it's often the case that 20% of customers generate 80% or more of a company's revenue. This principle can easily be applied to your website: if your site has 10 pages, but 2 of your pages are getting 80% of the total traffic, focus on those two pages. Then select the 20% of each page that you believe produces the most conversions, formulate your hypothesis, and test it!
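Finding those high-traffic pages is simple once you export pageviews from your analytics tool. Here's a minimal sketch that picks the smallest set of top pages covering a given share of traffic. The page paths and numbers are invented, and the function name is hypothetical.

```python
def pages_covering_share(pageviews, share_percent=80):
    """Return the most-visited pages that together account for at least
    `share_percent` percent of total pageviews."""
    total = sum(pageviews.values())
    selected, running = [], 0
    ranked = sorted(pageviews.items(), key=lambda item: item[1], reverse=True)
    for page, views in ranked:
        # Integer comparison avoids floating-point rounding surprises.
        if running * 100 >= share_percent * total:
            break
        selected.append(page)
        running += views
    return selected


# Hypothetical 10-page site.
pageviews = {
    "/": 5000, "/pricing": 3000, "/features": 600, "/blog": 500,
    "/about": 300, "/contact": 200, "/faq": 150, "/careers": 100,
    "/terms": 100, "/privacy": 50,
}
print(pages_covering_share(pageviews))
```

On this made-up site, two of the ten pages carry 80% of the traffic, so that's where the first tests should go.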

21. Don’t get fooled by design.

"Good design" isn't pretty design. Sure, a beautiful design can convert, but setting out with the goal of a beautiful design is a mistake. High-converting pages use design principles to create an experience that encourages interaction.

It's critical to understand that copy informs design, not the other way round. Information hierarchy is about telling your story in the right order so the information is consumed and remembered.

Visual hierarchy uses principles such as affordance (visual clues in an object’s design that suggest how we can use it) to make it more obvious what you should be doing. Ghost buttons are a design trend, but they don't appear to be clickable, so as a design treatment they are relying on a shift in visual style as opposed to being designed with conversion in mind.

Other design principles (my list totals 23) include dominance, attention, encapsulation, and overlapping. By understanding the underlying principles, you are better able to make more informed design decisions based on more than beauty." - Oli Gardner, Unbounce Co-Founder

In addition to that awesome quote by Oli, I'll add that redesigns are also expensive and extremely time-consuming; companies expecting more conversions from a refreshed look are possibly setting themselves up for disappointment. In this era where designers are addicted to parallax effects and scrolling, fancy image-heavy websites, and slow-loading homepages, usability is often forgotten. Furthermore, in the case of a website overhaul, the whole experience that repeat customers are used to is changed, which can destabilize the loyal visitors amongst your audience for a while.

British retailer Marks & Spencer is a prime example of a website redesign gone wrong. The overhaul led to an 8% decrease in online sales and countless frustrated customers.

Now, don't get this all wrong. I'm not saying you shouldn't revamp your website or that a pretty website does not convert. As a matter of fact, a nice visual interface will contribute to your company's trust factor; thus possibly helping your conversions. However, what I am saying is that the best and only way to decide what to test is to use data. And if you're planning to do a website overhaul, focusing on usability is key, and often many small steps to revise the existing site could be a better option - otherwise, remember you're starting the whole experience from scratch.

“Don’t make something unless it’s both necessary and useful. But if it is both necessary and useful, don’t hesitate to make it beautiful.” While aesthetic design and usability are inextricably interlinked, usability is most important to good design." - Josh Porter

22. Test pricing internally.

If you are reluctant to A/B test your prices because you are scared it might affect your revenue, an internal test can be performed by changing the price on the product page while keeping the original price on the checkout page. If there’s no change in your ‘add to cart’ (or other CTA) clicks, you can implement the real test and enjoy extra revenue.

Be aware that the price difference should never vary by too much, as it could create confusion and ultimately decrease conversions. Of course, you should only do it for test purposes, but it is still necessary to be careful and pay close attention to all metrics in order not to miss detecting any unexpected changes.

23. Start testing early.

A/B testing takes a certain amount of time and effort if you want to see real improvements and run tests correctly. At its most basic, it's relatively straightforward: you create a variation of a web page that 50% of your visitors will see; when the test is completed, you implement the winning variation, and conversions increase. However, for proper A/B testing, and in order to avoid mistakes that could ruin your tests and data, a well-defined plan is required. This means that spending a few weeks on post-test analysis, as well as constantly monitoring your experiments, is part of the game.

Startups are often the guilty ones here. The excuse is that they simply don't have time, and I believe this is due to poor prioritization. Imagine you start giving greater importance to split tests, and you discover that changing your headline and calls to action contributed to an increase in conversions of 300%. Awesome, right? Of course! But remember that without testing, you would never have figured it out. The sooner you begin to test your site, the sooner you can eliminate ineffective business decisions and design choices based on untested hypotheses, as well as uncover the variations of content that increase your sales.

24. Test different things for the same hypothesis.

Let's assume you came up with the following hypothesis based on feedback gathered from visitors: "Visitors do not want to send us their personal information due to the website having no reputation. Adding social proof such as client logos will increase trust resulting in more leads".

Now you decide to create a split test experiment with a variation of your home page that includes client logos. After the test is conclusive, you notice that adding logos made no difference and your original copy still won. At this point, should you decide that logos won't help? Probably not. In this case you might want to test different logos, positioning them in a different area, graying them out, and so on. At the end of the day if the hypothesis is invalidated, instead of scrapping it all, you could tweak it slightly to replace logos with client testimonials, and start testing again. You get the point? Never stop testing.

25. Don’t think your tests can’t fail.

In fact, most probably will. The Internet is filled with amazing case studies and success stories, but the truth is that not every hypothesis will be validated, and it’s OK. Remember that failures are rarely showcased; after all, isn't success more exciting? That is why the successful tests you stumble upon while browsing the web vastly outnumber the failed ones. In general, only about 1 out of 10 tests will succeed.

In an article posted on VWO’s blog, Noah Kagan, the founder of Appsumo, mentioned that only 1 out of 8 split tests created at Appsumo produces a significant change. So what do you do if your tests fail? Stay persistent and don’t quit! You're not alone.

26. Segment your data.

To get truly actionable data and understand what’s making some of your experiments fail or succeed, segments are key. Suppose you ran an experiment that performed poorly and failed. By segmenting your data, you might realize that it performed poorly for a certain part of the traffic, like iPhone users, but that it was a success for visitors coming from an Android device. It’s details like these you’ll miss when you don’t pay close attention to segments, and those are important to know before and after split tests. A few ideas...

27. Segment by source.

You’ll always have visitors coming to your site from different sources such as email campaigns, Facebook, YouTube, an article they read about you, and so on. So how do you know if visitors coming from your newsletter buy more than those who come from Facebook? How do you make sure your tests have not failed because most of the visitors coming to your website during your testing period were from your lowest converting source of traffic? Segmenting by source will unlock the answers hidden in the details.

28. Segment by behavior.

The reasons to segment by behavior are similar to why you should segment by source. When you segment by behavior, you will be able to get insights into how different groups of visitors behave differently on a website, and how this affects your test results and conversions. Different visitors have different goals, and behavioral segmentation allows you to identify those and the total impact.

29. Segment by outcome.

This one is a bit different from the last two, but equally important. When you segment by outcome, you pick the group of visitors that took the ideal path to conversion, or simply the group that you are focusing on. For example, it could be a segment that only includes people who have previously bought a product.
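Whichever dimension you pick, the mechanics of segmenting are the same: group visits by segment and variation, then compare conversion rates within each group. Here's a minimal sketch on a tiny invented dataset. The field names (`device`, `source`, `variation`, `converted`) are assumptions; in practice this data would come from your analytics export.

```python
from collections import defaultdict


def conversion_by_segment(visits, segment_key):
    """Conversion rate for every (segment value, variation) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [visitors, conversions]
    for visit in visits:
        bucket = totals[(visit[segment_key], visit["variation"])]
        bucket[0] += 1
        if visit["converted"]:
            bucket[1] += 1
    return {key: conv / count for key, (count, conv) in totals.items()}


# Invented sample: variation B helps Android visitors but hurts iPhone visitors.
visits = [
    {"device": "iPhone", "source": "email", "variation": "A", "converted": True},
    {"device": "iPhone", "source": "facebook", "variation": "A", "converted": False},
    {"device": "iPhone", "source": "email", "variation": "B", "converted": False},
    {"device": "iPhone", "source": "facebook", "variation": "B", "converted": False},
    {"device": "Android", "source": "email", "variation": "A", "converted": False},
    {"device": "Android", "source": "facebook", "variation": "A", "converted": False},
    {"device": "Android", "source": "email", "variation": "B", "converted": True},
    {"device": "Android", "source": "facebook", "variation": "B", "converted": True},
]

for key, rate in sorted(conversion_by_segment(visits, "device").items()):
    print(key, f"{rate:.0%}")
```

Swap `"device"` for `"source"` to segment by traffic source instead. Keep in mind that real segments need far more visitors than this toy sample before any difference means something.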

30. Popular A/B tests may not always work for you.

Every website is different, and so is every test. You can spend as much time as you want reading "what to test" articles or "shocking case studies" to find out what to test for your website, but be fully aware that those tips might not work for you as they did in the case studies. Generalizing A/B testing results based on one single case study will likely fail. Your product, traffic sources, and visitors' intent, behaviors and profile will be completely different from those of the websites showcased in examples.

Another reason not to attach too much importance to case studies is that you have no clue about the methodologies used in the tests. Sure, X website might have improved their signup rate by 500%, but was their sample size big enough to validate the test? What was the statistical significance? Are the test results skewed? Those details are important to assure the soundness of the test, and without that information, it's misguided to blindly replicate the test.

Next time, ask yourself the following when reading a case study:

- What was the initial hypothesis?

- How was the hypothesis generated?

- What was the sample size, and how many conversions went through each variation?

- For how long was the test running?

- What was the statistical significance?

- Was there only one conversion goal observed? What was the impact on other areas of the funnel?

- Was the impact sustainable for a long period of time after the test ended?

To perform a successful test, you have to use what you know about your website and the data you have, not "what worked" for other people.

"One of the most important - and most overlooked - aspects of conversion optimization is understanding your audience. Because although best practices are a good place to start, what works on other websites is not guaranteed to work on yours. You have to know your customers' unique problems and triggers and how to position yourself as their perfect solution. In order to know how to craft the right message to the right person at the right time, you must spend the time getting a deep understanding of your customer -- and what it'll take to convince them to take action. Without that, all other conversion tactics are meaningless." - Theresa Baiocco, Conversion Max

31. Exclude irregular days.

Running a test during a period that includes a holiday can skew your results. Your site’s traffic, and the intent and behavior of your visitors, will likely differ from regular patterns on those days. Of course, it’s great if you get more sales on a holiday, but when you’re testing, your goal is generally to optimize for most days of the year, not for a select few (unless you’re testing specifically for those days).

It's also important to be aware that other times of the year, such as paydays and tax return season, could affect your results. During those times, consumers have more spending power, which could reduce barriers to buying.

Possible solutions are either to pause your tests during major holidays or to exercise extreme caution and keep those days in mind when running your tests and doing your analysis.
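If you export daily numbers from your testing tool, leaving irregular days out of the analysis can be as simple as filtering them. This is a minimal sketch: the data layout, the dates, and the figures are all hypothetical.

```python
from datetime import date

# Hypothetical daily export: (day, visitors, conversions).
daily_results = [
    (date(2024, 12, 23), 1200, 36),
    (date(2024, 12, 24), 900, 40),
    (date(2024, 12, 25), 400, 30),
    (date(2024, 12, 26), 1500, 45),
]

# Days you consider irregular for your own business (an assumption here).
irregular_days = {date(2024, 12, 24), date(2024, 12, 25)}


def exclude_days(rows, excluded):
    """Keep only the rows whose day is not in the excluded set."""
    return [row for row in rows if row[0] not in excluded]


def conversion_rate(rows):
    """Overall conversion rate across the given rows."""
    visitors = sum(row[1] for row in rows)
    conversions = sum(row[2] for row in rows)
    return conversions / visitors if visitors else 0.0


regular_results = exclude_days(daily_results, irregular_days)
print(f"All days:     {conversion_rate(daily_results):.2%}")
print(f"Regular days: {conversion_rate(regular_results):.2%}")
```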

32. Start and stop your tests on the same weekday.

“In order to run the perfect test you should always start and stop it on the same weekday. I always end my test on the same day after it reaches my threshold for significance. It helps keep each test consistent across the same period of time. The more apples to apples your testing pattern is, the more it will be accurate. And that's the biggest piece of advice I have for running successful tests, long term.” - Alex Harris of Alex Designs
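One simple way to follow this advice is to round the planned test length up to whole weeks, so the end date always falls on the weekday the test started. The helper below is a hypothetical sketch of that idea, with an example start date chosen for illustration.

```python
import math
from datetime import date, timedelta


def planned_end_date(start, min_days):
    """Round the test length up to whole weeks.

    The returned date falls on the same weekday as the start date.
    """
    weeks = max(1, math.ceil(min_days / 7))
    return start + timedelta(weeks=weeks)


start = date(2024, 3, 4)            # example start date
end = planned_end_date(start, 10)   # 10 days needed, rounded up to 2 weeks
print(start.strftime("%A"), "->", end.strftime("%A"), end.isoformat())
```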

33. Test for the ultimate goal.

Sure, you can test your newsletter signup forms and increase your conversion rate on email signups, but if your ultimate goal is to increase your revenue through total order value, focus on the tests that will have a direct impact on order value. In this case, you could test ways to upsell during checkout, or you could test shipping promotions such as free shipping for orders over $X.

34. Document.

If you’re using a single tool for your A/B testing, it’s pretty easy to keep track of previous tests, but many of us use a combination of tools, perform deeper analysis through segmentation, and dig into the data with other analytics tools. With so much information spread across multiple tools, always keep a log of your hypotheses, targets, and test results, along with the conclusions and impact from your post-test analysis. This way, you can go back and find exactly the information you need with a simple glance at the document. Ultimately, it will help you plan better.
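A spreadsheet works fine for this log, but if you prefer something structured, a small record type is enough. The sketch below is only one possible layout: the class name, the field names, and the sample entry are assumptions, so adapt them to what you actually track.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ExperimentRecord:
    """One entry in a test log (all field names are illustrative)."""
    name: str
    hypothesis: str
    target: str
    tools: list
    start: str
    end: str
    result: str
    conclusion: str
    impact: str = ""


def to_json_line(record):
    """Serialize a record as a single JSON line, ready to append to a log file."""
    return json.dumps(asdict(record))


record = ExperimentRecord(
    name="Checkout upsell",
    hypothesis="Showing a related product at checkout raises order value",
    target="Average order value",
    tools=["split testing tool", "Google Analytics"],
    start="2024-03-04",
    end="2024-03-18",
    result="Example result goes here",
    conclusion="Example conclusion goes here",
)
print(to_json_line(record))
```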

35. Split your list and emails when doing email A/B tests.

“As for email and A/B testing. That is a completely different animal. The easiest way to get the best results is to split your list and send two separate emails. Here you are either testing for opens or clicks. It's tough to test for both goals at the same time. You test open rates with different subject lines. You test clicks with variations in the body content of the email.” - Alex Harris, Alex Designs
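If your email tool does not split the list for you, a random split is easy to do by hand. The sketch below shuffles the list with a fixed seed and cuts it in half. The function name and the addresses are hypothetical, and the random assignment is an assumption on my part, made so that the two groups are comparable.

```python
import random


def split_list(recipients, seed=42):
    """Randomly split recipients into two groups of (almost) equal size."""
    shuffled = list(recipients)            # copy so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    middle = len(shuffled) // 2
    return shuffled[:middle], shuffled[middle:]


recipients = [f"user{i}@example.com" for i in range(10)]
group_a, group_b = split_list(recipients)
print(len(group_a), "recipients get email A,", len(group_b), "get email B")
```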

36. Be careful when testing only an isolated funnel stage.

In certain cases it can be a good idea to focus your tests on the elements of a single funnel stage, but you must be careful: if you optimize one step without looking at the complete funnel, you may improve that stage while making the subsequent stages worse, and it will become harder to improve the conversion rate of your ultimate goal.
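To make this concrete, compare the step-by-step rates with the end-to-end rate. In the sketch below, the stage names and visitor counts are invented: the variation clearly improves the first step, yet the complete funnel converts slightly worse.

```python
def step_rates(stages):
    """Conversion rate from each stage to the next.

    stages: ordered list of (name, visitors who reached that stage).
    """
    rates = {}
    for (prev_name, prev_count), (name, count) in zip(stages, stages[1:]):
        rates[f"{prev_name} -> {name}"] = count / prev_count
    return rates


def overall_rate(stages):
    """End-to-end conversion rate from the first stage to the last."""
    return stages[-1][1] / stages[0][1]


# Hypothetical numbers for illustration only.
control = [("landing", 10000), ("cart", 1000), ("checkout", 400), ("purchase", 200)]
variation = [("landing", 10000), ("cart", 1500), ("checkout", 450), ("purchase", 190)]

for label, funnel in (("control", control), ("variation", variation)):
    first_step = step_rates(funnel)["landing -> cart"]
    print(f"{label}: landing -> cart {first_step:.1%}, overall {overall_rate(funnel):.2%}")
```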

37. Tweak the process continuously.

Times change, technology evolves, and the laggards get left behind. So always tweak and refine your process so that it evolves with the times and with what you learn. As you run more tests, you will learn more about your visitors and how they navigate your website, and your process should reflect those learnings. Not only will your tests be more efficient, but your marketing system will also get better at identifying areas in need of improvement and at forming hypotheses, all of which contributes to a better success rate.

38. Implement.

Now this one might seem obvious, but I have had conversations with companies that absolutely wanted to improve their website and ran A/B tests, yet never treated implementing the improvements as a top priority. Ridiculous, I know, but it does happen. If you’re not implementing your findings, you’re not taking your business seriously.

Think about this: you found that by changing the structure of your homepage you can increase your conversion rate by 60%, but you keep delaying the implementation while still trying to figure out how to grow? Every second you delay an implementation, you’re throwing money out the window, plain and simple.

39. Leverage the power of targeting.

Targeting is something people still don’t do often enough when A/B testing, which is understandable as it is a little more advanced. Here's how it works: when segmenting your results reveals a winning variation for a certain segment, you can choose to show that variation only to that segment.

By doing this, you will end up with multiple variations of your copy, each optimized for a different segment. For example, someone who comes to your site from an email promotion you sent will see a version optimized specifically for that promotion, while someone coming to your site for the first time will see a version tailored to first-timers.

Targeting creates a much more personalized website experience for your visitors and its potential to increase your conversion rate is immense. Using this method, VWO increased the click-through rate (CTR) to their careers page by 149%.
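At its core, targeting is a lookup from segment to winning variation, with a fallback for everyone else. Most testing tools handle this for you, so the sketch below only illustrates the idea. The segment names, variation names, and function are all hypothetical.

```python
# Winning variation per segment, as found during post-test segmentation
# (all names below are made up for illustration).
WINNERS_BY_SEGMENT = {
    "email_promo": "variation_promo",
    "first_visit": "variation_first_timer",
}
DEFAULT_VARIATION = "control"


def pick_variation(segment):
    """Return the variation to show for a segment, falling back to the control."""
    return WINNERS_BY_SEGMENT.get(segment, DEFAULT_VARIATION)


for segment in ("email_promo", "first_visit", "returning_customer"):
    print(segment, "->", pick_variation(segment))
```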

40. A/A testing before A/B testing could be a waste of time.

The goal of an A/A test is to test your original page against itself through your testing tool in order to verify that everything is set up correctly and the numbers line up. The problem with this practice is that it takes a lot of time that could be used to run an A/B test. With only one major test at a time being recommended, it's wiser to spend your testing time on real experiments as often as possible. I'm not saying you shouldn't validate your setup at all. What I am saying is that there are potentially quicker ways to accomplish the same goal, such as the following:

- Comparing the data in Google Analytics with the data in your split testing tool to see if the numbers line up

- Running cross-browser tests

- Visiting your website from different computers in different areas for quality assurance

- Paying obsessively close attention to your test
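For the first check in the list, it helps to decide in advance how large a gap between the two tools you are willing to accept, since they rarely match exactly. The sketch below flags a mismatch when the counts differ by more than a tolerance. The 5% threshold, the function names, and the counts are assumptions, not an industry standard.

```python
def relative_gap(analytics_count, tool_count):
    """Difference between the two counts as a share of the larger one."""
    largest = max(analytics_count, tool_count)
    if largest == 0:
        return 0.0
    return abs(analytics_count - tool_count) / largest


def counts_line_up(analytics_count, tool_count, tolerance=0.05):
    """True when the two tools agree within the chosen tolerance."""
    return relative_gap(analytics_count, tool_count) <= tolerance


# Hypothetical conversion counts for the same page and date range.
print(counts_line_up(1000, 980))  # gap of 2%, within tolerance
print(counts_line_up(1000, 800))  # gap of 20%, worth investigating
```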

41. Never stop testing.

You’ve just had a successful A/B split test, great! Now what do you do? Start another test. If something has worked great for you, why would you stop?

Conversion rate optimization is the best way to increase your number of sales or signups while keeping the same number of existing visitors. Plus, as you collect data over time, you are able to better understand your visitors and improve the effectiveness of your tests. A/B testing, done correctly, is a marketing practice that could yield one of the highest ROIs, so don’t stop after one or two tests. Become consumed by it and always have a test running.